In managerial circles, the activity covered by the term ‘exploratory testing’ has gathered something of a bad reputation. From the perspective of the uninitiated, it might seem like nothing more than random clicking and typing until something goes wrong and a bug report can be filed. However, this is not the case at all.

Let’s begin with the definition of the term itself, which might already shed a different light on the underlying process:

“Exploratory testing is unscripted, unrehearsed testing. Its effectiveness depends on several intangibles: the skill of the tester, their intuition, their experience, and their ability to follow hunches.”

– James Bach

picture source: https://www.codecentric.nl/2014/01/09/rapid-software-testing-with-james-bach-17-19-november-2014/

To the casual reader, this might sound disorganized, and the definition does imply a lack of structure. In some cases, this might not be far from the truth, but the goal of this article is to show that it can be avoided, among other methods, by applying a software testing approach that was developed in the seemingly distant past (ancient history in IT terms).

Exploratory testing has many advantages. The most obvious ones manifest themselves early in the software development life cycle, when documentation and specification materials are not yet readily available, but that’s not all. Compared with running scripted tests, exploratory testing needs far less preparation. In addition, obvious bugs are found a lot faster, the tester can apply deductive reasoning, and the work is intellectually more stimulating. Also, after the initial execution of a given set of test cases, most bugs are found by some type of exploratory testing. The reason is that the same set of tests tends to uncover fewer and fewer problems over time, while ignoring other areas of the software that have yet to be explored.

picture source: https://i.pinimg.com/236x/18/3a/19/183a19b01507a488b4c537f4476e4f76–grammar-jokes-funny-jokes.jpg

Session-based test management is a software testing approach, or framework, if you will, developed by brothers Jonathan and James Marcus Bach (mentioned above) in the early 2000s. Both authors are globally renowned software testers in their own right. Their aim was not only to bring a sensible degree of structure to exploratory testing, the effectiveness of which depends largely on the skills, experience and intuition of the tester, but also to introduce the accountability so sorely missed by management. Imagine a situation where, when asked about the status at the end of the day, the tester gives an answer like “I played around with this feature and the other”. Even if the testing session yielded multiple serious bug reports, the manager does not necessarily know what has been tested and how.

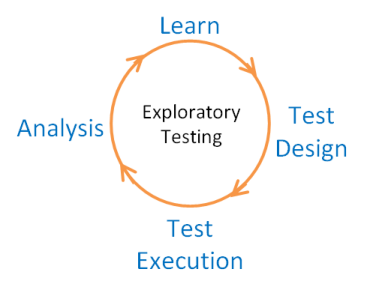

The process can be described as structured exploratory testing, where the structure is not provided by the scripted nature of the test session, but rather by a set of expectations that are defined in advance for each session and concern the nature of the test activity and the manner in which we do the reporting. The testing itself is done in uninterrupted sessions, which can range from 45 minutes to several hours. No matter how long the session is, it is done against a session charter, but testers are allowed to explore new opportunities discovered during the session. While in session, the tester executes tests based on ideas or heuristics and records their progress.

Once the session is concluded, the output takes the form of a session report with all the relevant information about the session and what has been done. Then, the process is concluded with a so-called debriefing between the tester and the test manager.

To put it simply, the session charter is the mission statement created in advance, describing the goal we have set for the current session, e.g. test the View menu of the target application for possible defects. Even though the charter is set beforehand, it can change at any time.
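To make the idea of a charter-driven session more concrete, here is a minimal sketch of how a session could be recorded. The `Session` class, its fields, and the sample note are purely illustrative assumptions; the approach itself does not prescribe any particular data structure.

```python
from dataclasses import dataclass, field
from datetime import timedelta


@dataclass
class Session:
    """One exploratory test session (hypothetical structure, for illustration)."""
    charter: str                      # mission statement set before the session
    duration: timedelta               # planned uninterrupted time box
    notes: list[str] = field(default_factory=list)
    charter_history: list[str] = field(default_factory=list)

    def log(self, note: str) -> None:
        """Record a test idea, observation, or finding during the session."""
        self.notes.append(note)

    def revise_charter(self, new_charter: str) -> None:
        """Change the charter mid-session, keeping the old one for the debriefing."""
        self.charter_history.append(self.charter)
        self.charter = new_charter


session = Session(
    charter="Test the View menu of the target application for possible defects",
    duration=timedelta(minutes=90),
)
session.log("Zoom level resets after switching tabs")  # made-up observation
session.revise_charter("Test the View menu zoom controls for possible defects")
```

Keeping the previous charter in `charter_history` is one possible way to make a mid-session change of direction visible later, when the session is discussed with the test manager.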

The session metrics are, along with other information, staples of the delivered test report and the means to convey the status of the exploratory test process. They are as follows:

The test report document should be standardized with respect to layout and content, so that it is possible to extract and summarize the data with the help of a custom or third-party tool and create meaningful statistics in the most efficient manner.
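As a rough illustration of why a standardized layout pays off, the sketch below parses two reports written in a hypothetical `KEY: value` format and totals their numeric fields. The field names (`CHARTER`, `TESTER`, `DURATION_MIN`, `BUGS`) and the sample values are assumptions made up for this example, not a prescribed report format.

```python
from collections import Counter

# Two reports in a hypothetical "KEY: value" layout; all values are invented.
REPORTS = [
    """CHARTER: Test the View menu for possible defects
TESTER: alice
DURATION_MIN: 90
BUGS: 3
""",
    """CHARTER: Explore the import dialog
TESTER: bob
DURATION_MIN: 45
BUGS: 1
""",
]


def parse_report(text: str) -> dict[str, str]:
    """Split each non-empty 'KEY: value' line into a dictionary entry."""
    fields = {}
    for line in text.splitlines():
        if not line.strip():
            continue
        # Split on the first colon only, so values may contain colons.
        key, _, value = line.partition(":")
        fields[key.strip()] = value.strip()
    return fields


def summarize(reports: list[str]) -> dict[str, int]:
    """Total the numeric fields across all reports."""
    totals = Counter()
    for text in reports:
        fields = parse_report(text)
        totals["sessions"] += 1
        totals["minutes"] += int(fields["DURATION_MIN"])
        totals["bugs"] += int(fields["BUGS"])
    return dict(totals)


summary = summarize(REPORTS)
print(summary)  # {'sessions': 2, 'minutes': 135, 'bugs': 4}
```

Because every report follows the same layout, the parser stays trivial, and adding a new statistic only requires reading one more field.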

The session report should contain the following information in addition to the test metrics just mentioned:

picture source: http://1.bp.blogspot.com/-a3EaHdoYNnU/T72xbZUPizI/AAAAAAAAASw/OzKEDlWn1AA/s1600/ET_cycle.png

At the end of each session, the tester and the test manager get together for a debriefing, which should take no longer than 15 to 20 minutes, and talk about the activities carried out. This is the best opportunity for the manager to ask any questions they might have concerning the session. It is worth noting that the effectiveness of these debriefings depends heavily on the manager’s ability to ask the right questions.

This article is in no way an appeal to replace traditional scripted testing practices with the approach described here. Rather, it recommends a compromise: applying the approach in moderation alongside them. Both methods have their strengths, so it should be possible to take advantage of both by setting aside time for a couple of these sessions every sprint.

If you like this post and want more inspiration, go and pay James Bach a visit.
